Imagine one morning your car just refuses to start because it is feeling depressed. Or it performs badly on the road, never exceeding 60 km/h. You would most probably be like “B*tch please. What The F*ck is wrong with you. Get the f*ck moving!!!”

Emotions are sometimes considered a negative thing, especially by heartbroken people. Brilliant students fail their exams. Anyway, let’s hypothesize about whether machines would perform better with “emotions”.

The “Sad” Car

The same thing applies to a car. If you keep the accelerator pressed down most of the time, you’re definitely wearing down the parts, especially if you don’t maintain it properly. If the car knew that its owner doesn’t “love” it, it would not run fast. It would go at an average speed, like 60-80 km/h max.

Advantages:-

1. Car wear is reduced. Hence heavy maintenance costs are reduced. $$$

2. A driver who goes fast in a probably worn-out car is a serious safety risk for both the car and the driver.

When the car “cares” about itself, the driver also travels safer.

The “Happy” Car

If the car is happy, it knows it can push its limits to the maximum. It has a “trust” relationship with its owner. If you let your wife drive, then it would be great if the car went into a “scared” state and behaved accordingly. I think you got the point 😛

Modelling Emotions using Fuzzy Logic

I’m supposed to learn Fuzzy Logic for my Final Year Project. I’ll try to make a tiny demo. Non-technical people can just skip to The Evil Playboys section.

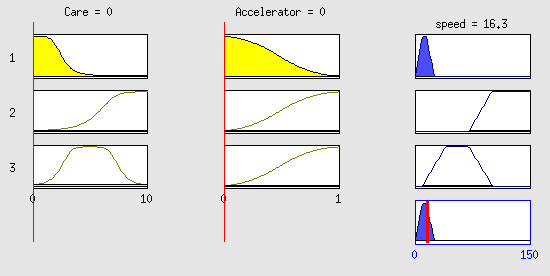

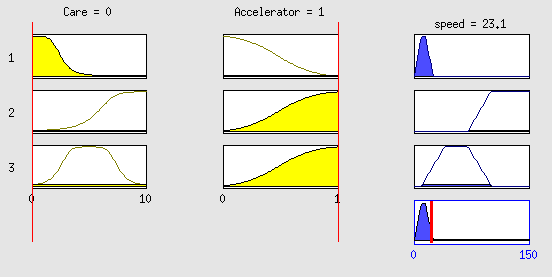

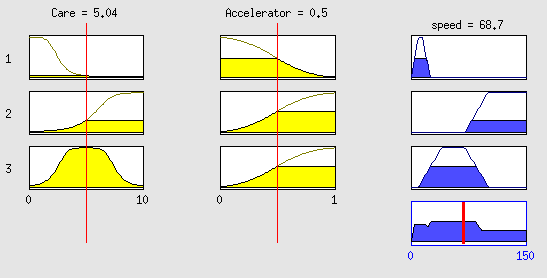

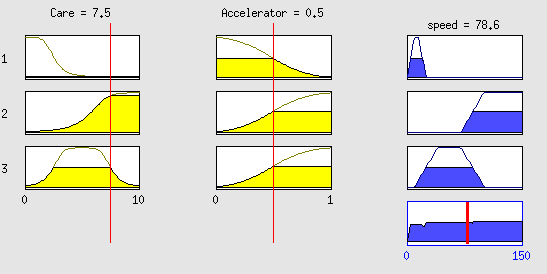

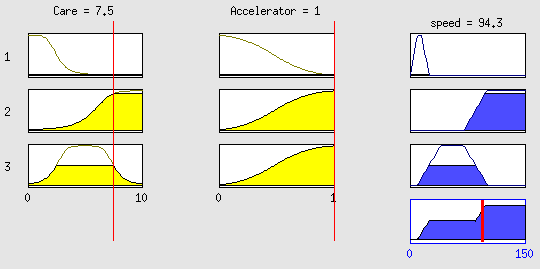

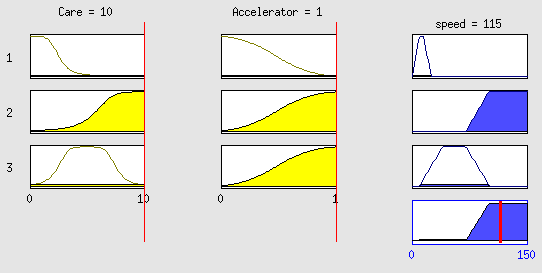

Let’s make a tiny simulation of such a car. We will have 2 input variables: “care”, which will be between 0 and 10, and the accelerator, which will be between 0 and 1. The output, speed, will be between 0 and 150 km/h. I did that in Fuzzy Logic Toolbox in 30 mins. Not that good but quite usable.

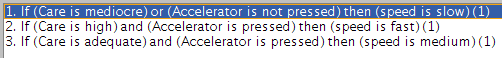
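If you don’t have the toolbox, here is a rough Python sketch of the same idea. It is not the actual model from the screenshots: the membership functions, the rule table and the speed values in it are made-up numbers for illustration, and it uses a simplified Sugeno-style weighted average of the rule outputs rather than whatever inference method the toolbox was set to. Only the variable ranges (care 0 to 10, accelerator 0 to 1, speed 0 to 150) come from the setup above.

```python
def tri(x, a, b, c):
    """Triangular membership: 0 at a and c, 1 at b.
    If a == b or b == c, that side becomes a flat 'shoulder'."""
    if x <= a:
        return 1.0 if a == b else 0.0
    if x >= c:
        return 1.0 if b == c else 0.0
    if x < b:
        return (x - a) / (b - a)
    if x > b:
        return (c - x) / (c - b)
    return 1.0


# Assumed fuzzy sets (hypothetical shapes, not taken from the original model).
CARE_SETS = {
    "low": (0, 0, 5),
    "medium": (0, 5, 10),
    "high": (5, 10, 10),
}
ACCEL_SETS = {
    "light": (0.0, 0.0, 0.5),
    "medium": (0.0, 0.5, 1.0),
    "full": (0.5, 1.0, 1.0),
}

# Assumed rule table: (care, accelerator) -> crisp speed in km/h.
# A "sad" car (low care) tops out around 70, a "happy" one reaches 150.
RULES = {
    ("low", "light"): 0, ("low", "medium"): 40, ("low", "full"): 70,
    ("medium", "light"): 0, ("medium", "medium"): 60, ("medium", "full"): 110,
    ("high", "light"): 0, ("high", "medium"): 80, ("high", "full"): 150,
}


def car_speed(care, accelerator):
    """Return a speed (0-150) for care in [0, 10] and accelerator in [0, 1]."""
    # Clamp inputs to the ranges used in the post.
    care = min(max(care, 0.0), 10.0)
    accelerator = min(max(accelerator, 0.0), 1.0)

    weighted_sum = 0.0
    total_weight = 0.0
    for (care_label, accel_label), speed in RULES.items():
        # Rule strength: "care is X AND accelerator is Y", with AND as min.
        strength = min(
            tri(care, *CARE_SETS[care_label]),
            tri(accelerator, *ACCEL_SETS[accel_label]),
        )
        weighted_sum += strength * speed
        total_weight += strength

    # Weighted average of the rule outputs (zero-order Sugeno style).
    return weighted_sum / total_weight


if __name__ == "__main__":
    for care, accel in [(0, 1.0), (5, 0.5), (8, 0.5), (10, 1.0)]:
        print(f"care={care}, accelerator={accel} -> {car_speed(care, accel):.1f} km/h")
```

With these assumed rules, flooring the accelerator on a neglected car gets you far less speed than on a well cared-for one, which is the whole point of the “sad” and “happy” car. Tweak the numbers in `RULES` to make the car more or less moody.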

The rules:-

Case 2: Trying to push the car

Case 3: Moderate care, moderate acceleration

Case 4: Increasing care, same acceleration

Case 5: Pushing the accelerator fully

Case 6: Perfect car with full accelerator pressed

The Evil Playboys

Of course, some people might use it for their own benefit and “play with the emotions” of others. Giving the machines false “pats”, turning off their sensors, or hacking into the system to make the “happy” value higher would surely increase productivity in the short term. But in the end, it always results in broken hardware and <3. What do you think?