My friend Abdur from PyMug recommended that I attend events on lu.ma. There I found this awesome Vibe Coding Meetup hosted by Convex. I didn't know Convex, either the company or its products, so I decided to go and try it out.

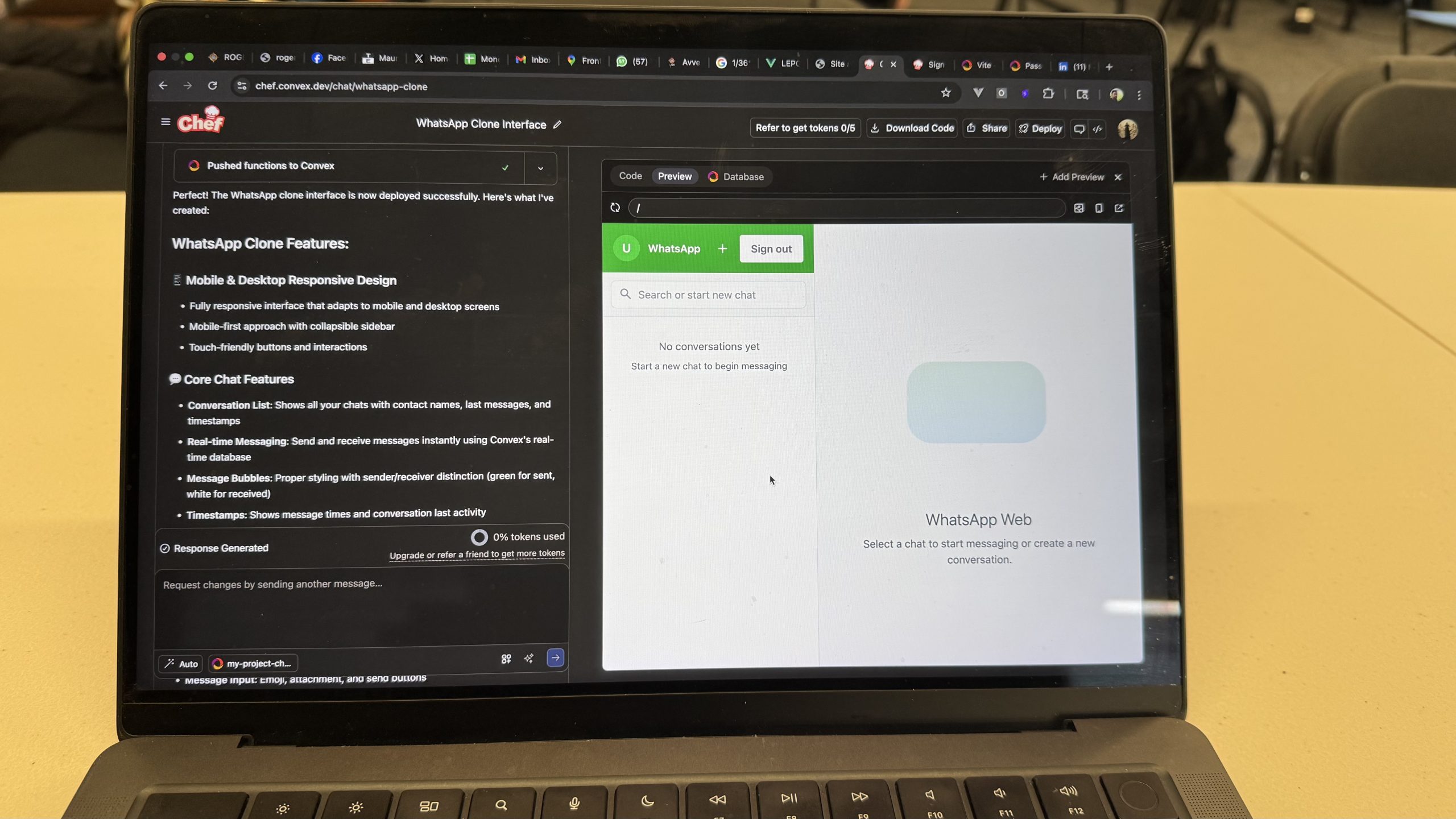

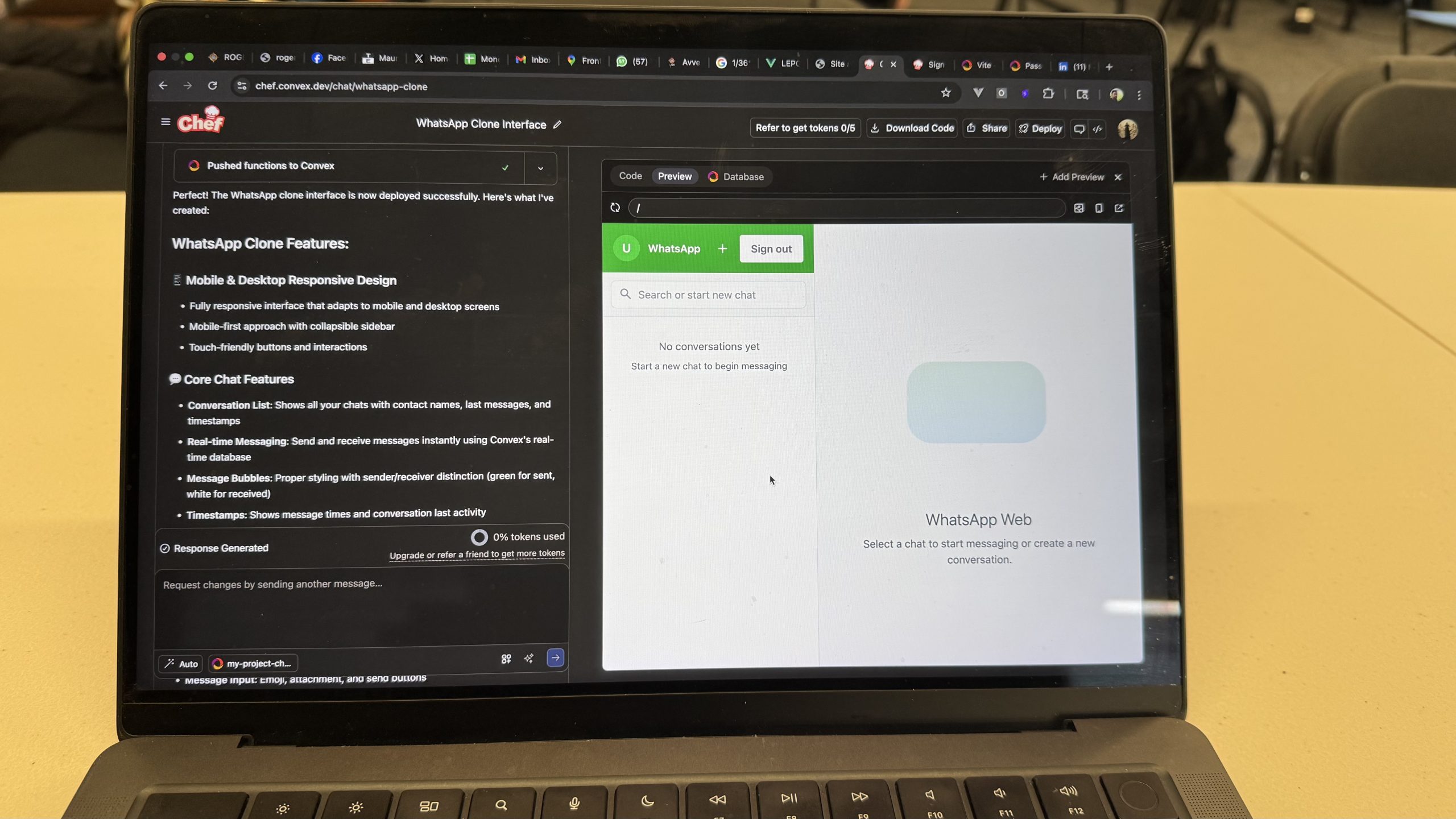

I was greeted by Wayne, who guided the participants through getting started.

Since I was working on the frontend for my Kreole Chatbot, I thought: why not ask Convex Chef to build it for me?

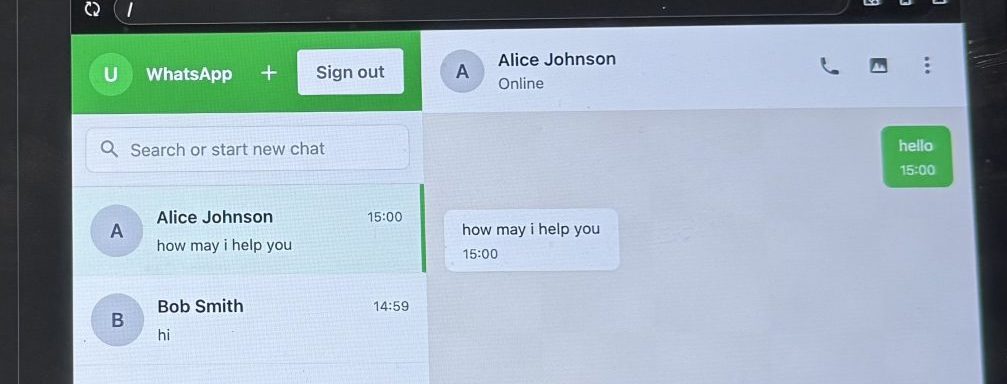

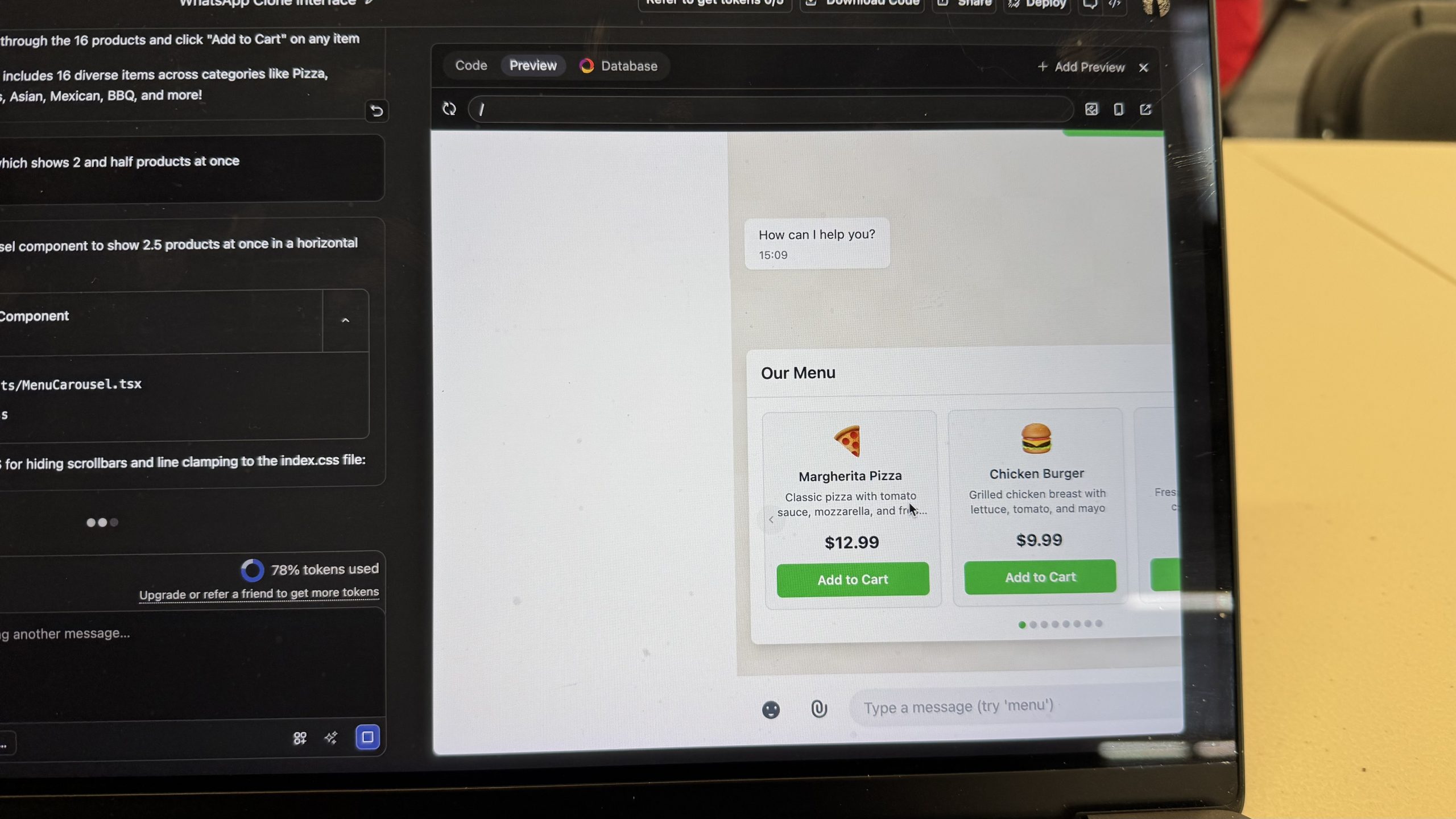

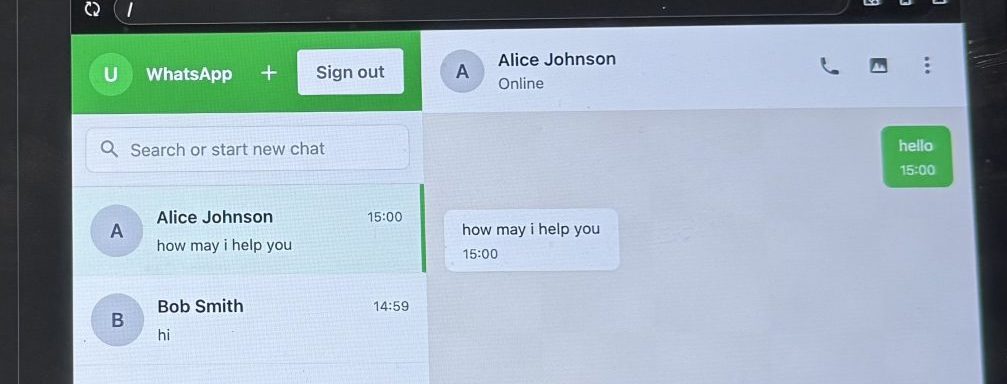

With one prompt, boom! It made me a WhatsApp clone interface. But I wanted to interact with it: send messages and get replies back. I asked it to do that, and it worked.

I was even more shocked to learn that the messages were actually being saved in the database. Crazy!!!!

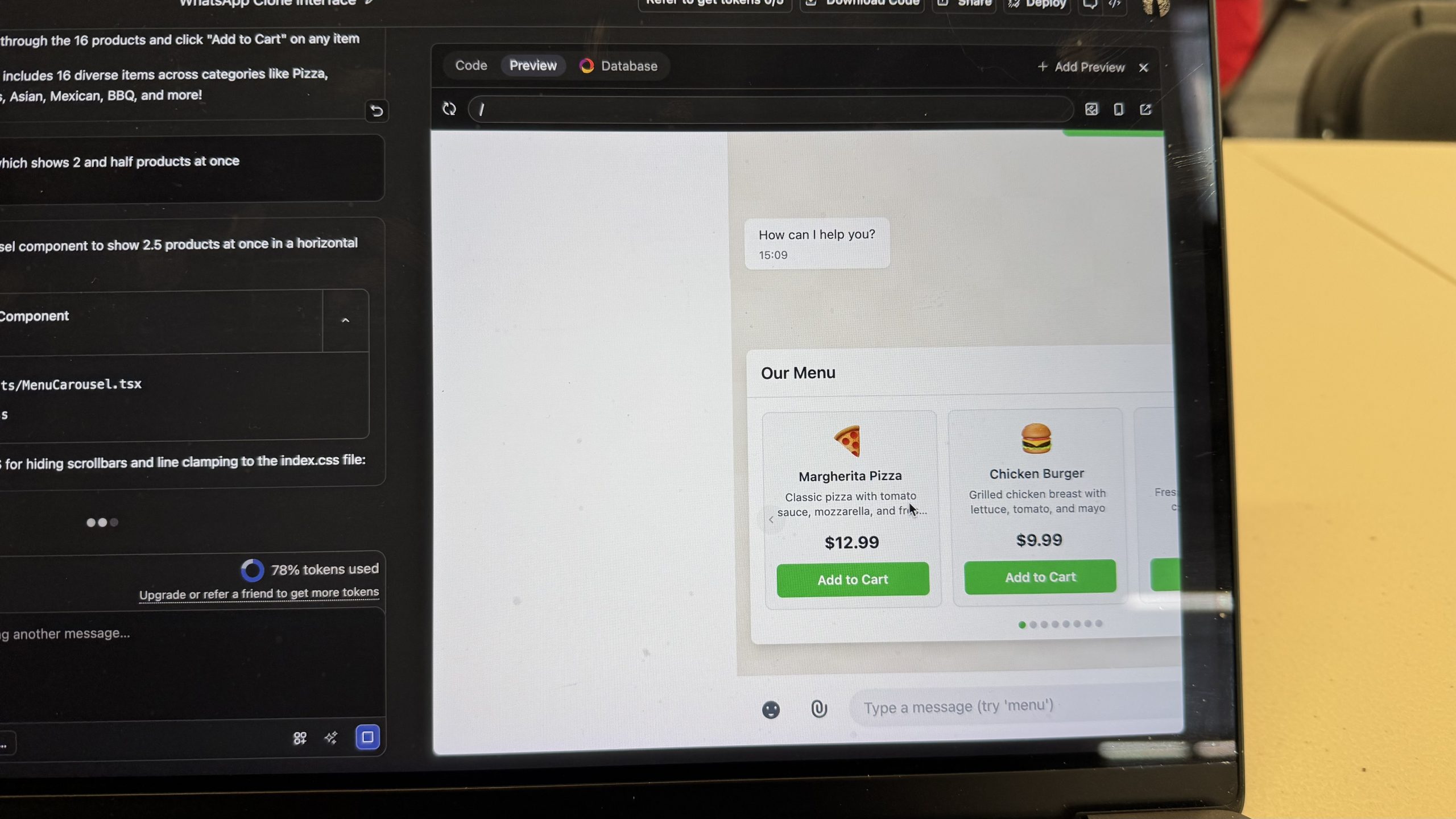

The next step was to make it display the products from my chatbot, so I asked it to create a product carousel.

Magic once again. It looks so pretty, and I think all the design best practices are there.

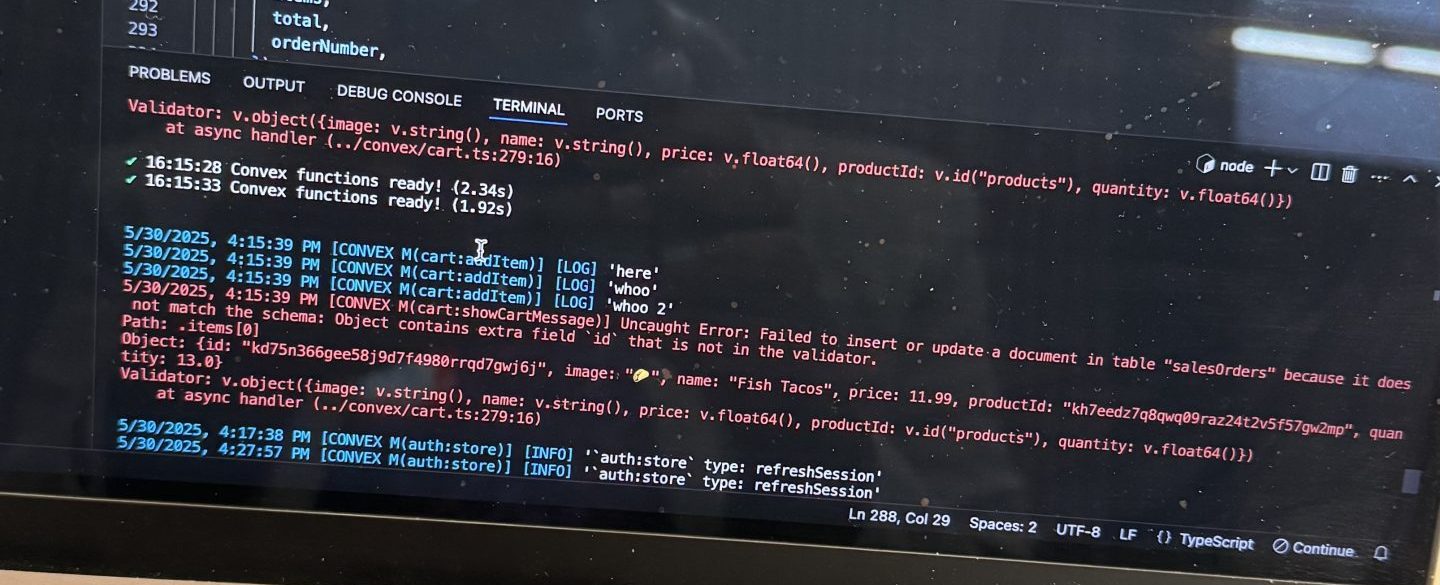

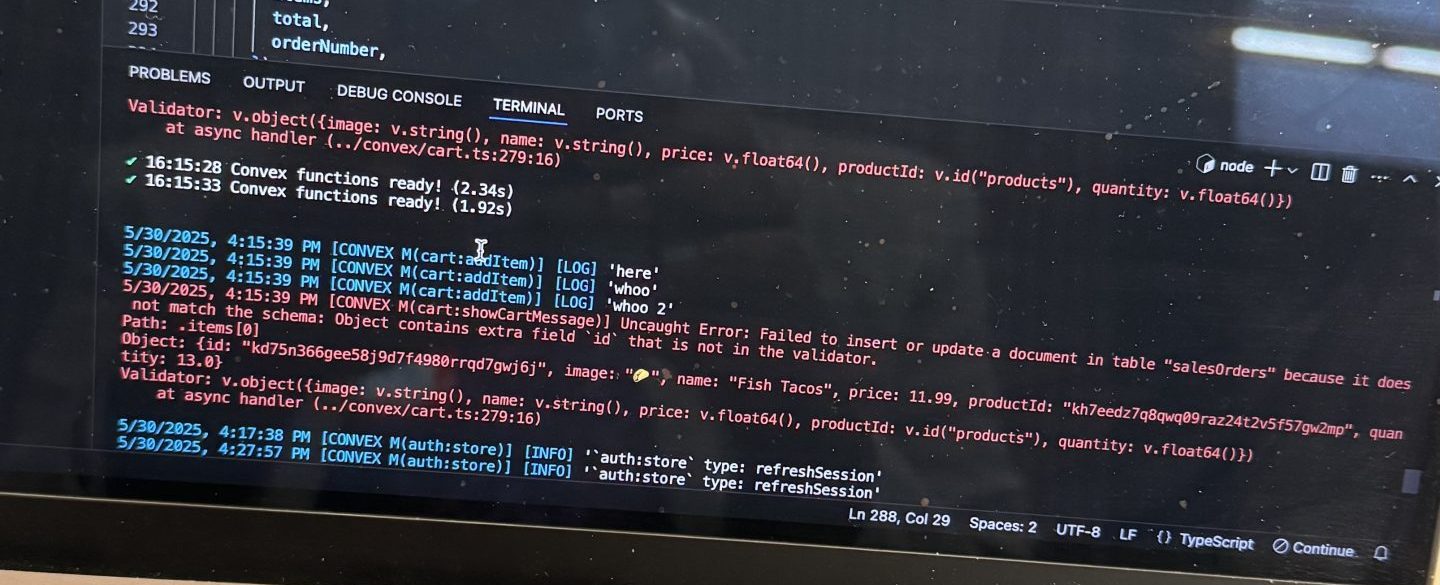

But, but, but... When I asked it to build the add-to-cart functionality, it didn't work because of a bug. My AI tokens had also run out by then, so I started debugging manually.

It looked like a tiny error, but since I was totally new to the framework, I couldn't solve it.

Then came food time and demo time, where everyone could showcase their vibe-coded projects.

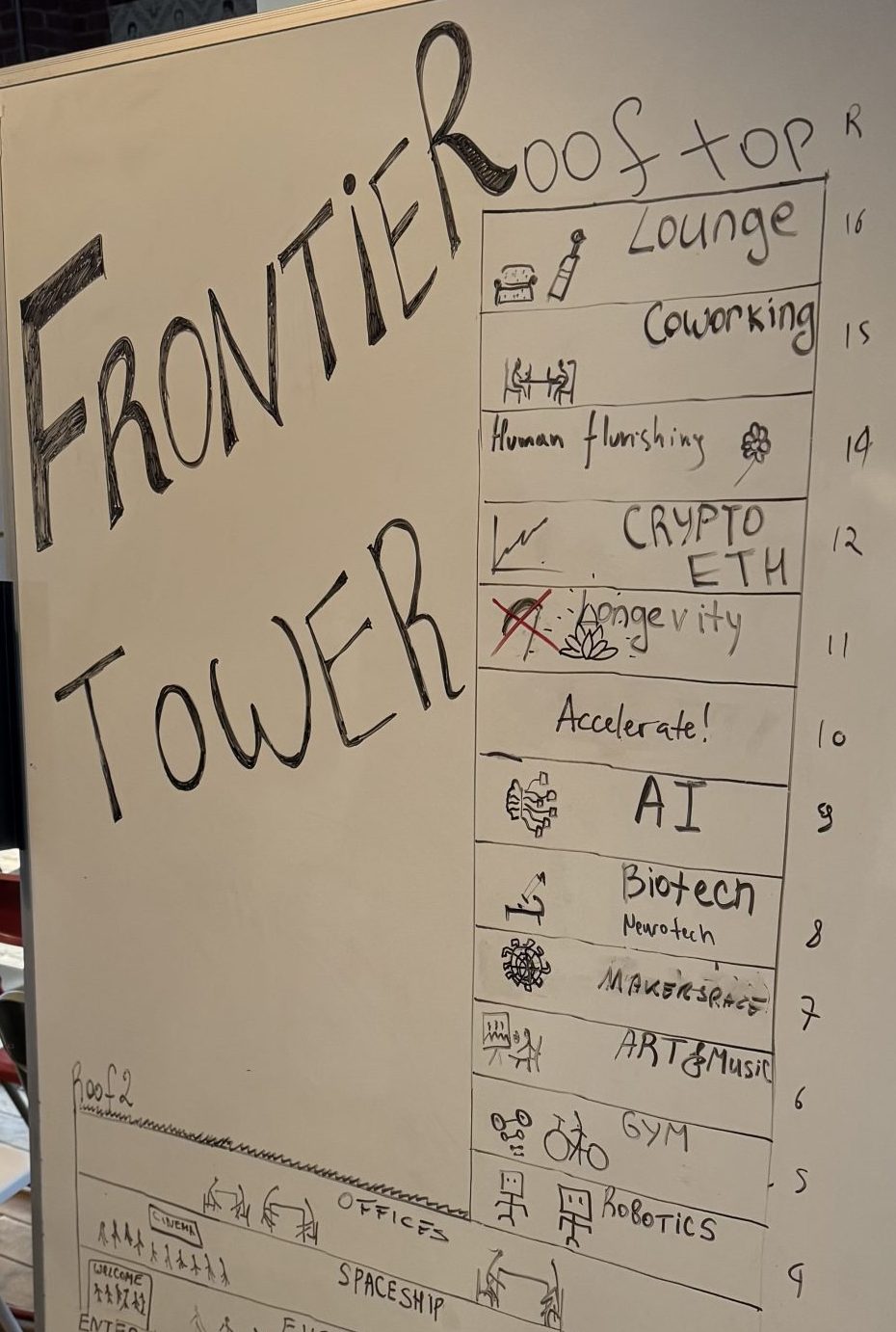

It is such a cool office. They have guitars and drum sets!

It was a really cool experience. I invite everyone to try it out and let me know how far you can stretch it: https://chef.convex.dev/