I recently bought a used PlayStation 4 for some casual gaming. Is the PS4 outdated? It was first released in late 2013, i.e. six years ago. Is it still worth getting? Let’s see.

Price

First and foremost, let’s talk about price. In Mauritius, a brand new PS4 Slim costs between Rs 12,500 and Rs 19,000 with one game included. The PS4 Pro ranges from Rs 18,000 to Rs 22,000. However, used consoles can be found on Facebook groups for Rs 8,000 to Rs 10,000, though only the non-Pro PS4.

Building a PC equivalent to the non-Pro PS4 would cost at least Rs 25,000. For an equivalent of the PS4 Pro, we’d need a budget of at least Rs 60,000.
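To put those numbers side by side, here is a minimal Python sketch of the arithmetic, using only the prices quoted above. The function and variable names are just for illustration. Since Rs 25,000 is a minimum for the PC, the real savings could be higher.

```python
# Prices in Mauritian rupees (Rs), taken from the figures quoted above.
PC_MINIMUM = 25_000  # minimum quoted for a PC equivalent to the non-Pro PS4

CONSOLE_PRICES = {
    "new PS4 Slim": (12_500, 19_000),
    "used PS4": (8_000, 10_000),
}


def savings_vs_pc(price_range, pc_price=PC_MINIMUM):
    """Return the (smallest, largest) saving compared with the PC build."""
    low, high = price_range
    return pc_price - high, pc_price - low


for name, price_range in CONSOLE_PRICES.items():
    smallest, largest = savings_vs_pc(price_range)
    print(f"{name}: saves Rs {smallest:,} to Rs {largest:,}")
```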

If you no longer need the PS4 in the future, you can always sell it on Facebook.

Performance and Graphics Quality

You can be sure that any game released for the PS4 will run butter smooth, no matter what year it is released. This is because game developers optimize each game to deliver a target frame rate while keeping decent graphics quality.

The game developers know very well the power as well as the limitations of the PS4 hardware. It’s much harder to do the same on PCs because the hardware comes in so many different combinations.

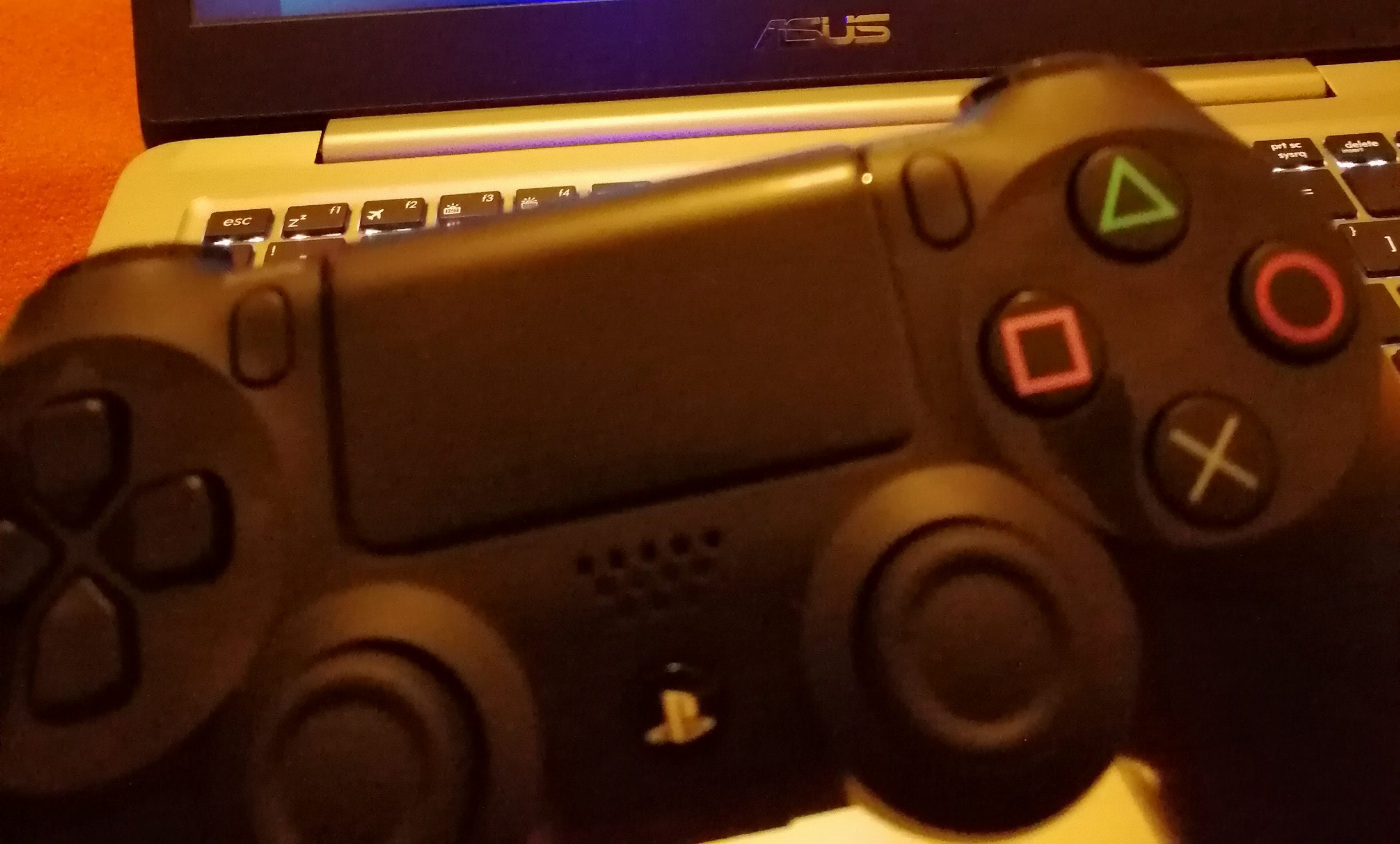

The Controller: DualShock 4

As someone who has only used a mouse and keyboard my whole life, the DualShock controller feels like experiencing state-of-the-art technology. It has the following features:

1. Vibration

Whenever you’re getting shot or driving off-road, the vibration feedback makes for an awesome gaming experience. The vibration can be very powerful sometimes.

2. Pressure-sensitive Buttons and Analogue Sticks

Keyboard presses are binary. If you are playing a racing simulator on a PC, the arrow keys make the car accelerate or turn at the maximum level each time you press them. On the DualShock 4 controller, however, you can steer the car slightly or apply the brakes gently to take a corner perfectly.

3. It has a Trackpad too

However, it’s not always linked to the cursor on the screen. I guess game developers don’t use it often in their games.

4. Accelerometer included

I discovered this feature in text-input mode, where you can also move the cursor on the screen by swinging the controller. The games themselves don’t seem to use this feature a lot.

5. Audio jack

The 3.5 mm audio jack is one of the best features of the wireless controller. You can sit back on your couch late at night playing games on the TV without disturbing anyone else in the house.
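Going back to point 2, the difference between a binary key press and an analogue input can be sketched in a few lines of Python. This is only an illustration, not how any particular game reads the controller: the 0 to 255 raw range, the dead zone value and the function names are all assumptions made for the example.

```python
def keyboard_throttle(key_pressed: bool) -> float:
    """A key is either down or up, so the throttle is all or nothing."""
    return 1.0 if key_pressed else 0.0


def trigger_throttle(raw: int, max_raw: int = 255, dead_zone: int = 10) -> float:
    """Map a raw analogue reading to a throttle between 0.0 and 1.0.

    The raw range (0..255) and the dead zone are assumed values.
    """
    if not 0 <= raw <= max_raw:
        raise ValueError(f"raw reading must be between 0 and {max_raw}")
    if raw <= dead_zone:
        # Ignore tiny readings so a resting finger does not move the car.
        return 0.0
    return (raw - dead_zone) / (max_raw - dead_zone)


# A half-pressed trigger gives roughly half throttle,
# which a keyboard key simply cannot express.
print(keyboard_throttle(True))
print(round(trigger_throttle(128), 2))
```

With the keyboard version the car is always at full throttle or none, while the analogue version gives every value in between.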

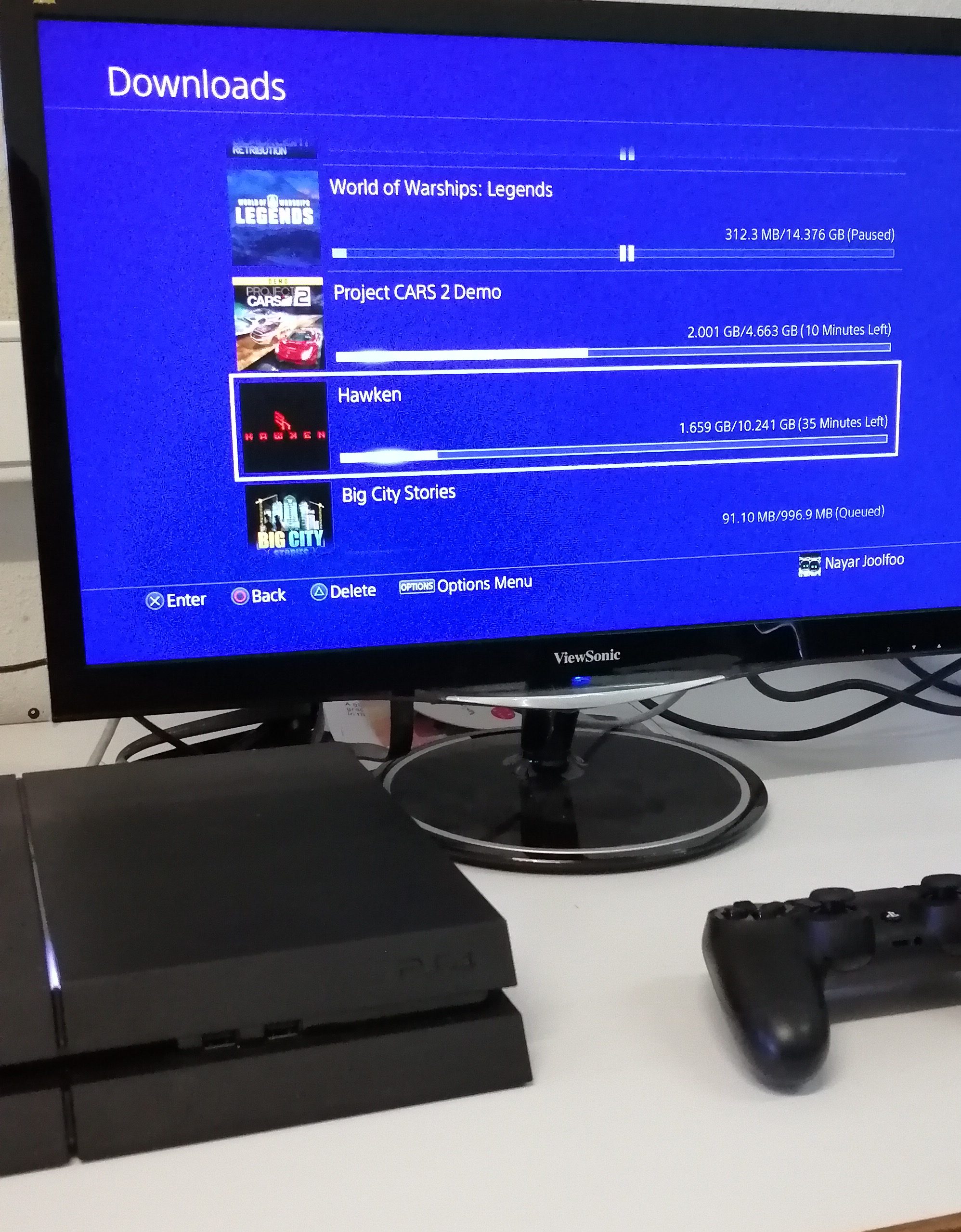

Games Availability and Playing Online

Games will keep getting released for the PS4 until the PS5 actually penetrates the market in the coming years. You can have plenty of fun now. And there are lots of free-to-play games on the Store which are of really good quality, e.g. Armored Warfare, H1Z1, Warface, Crossout etc. These free games don’t require a PlayStation Plus subscription to play online. What more could you ask for?

And yes, to play online in other games, you’ll have to buy a PlayStation Plus subscription, which means setting aside a recurring budget for it.

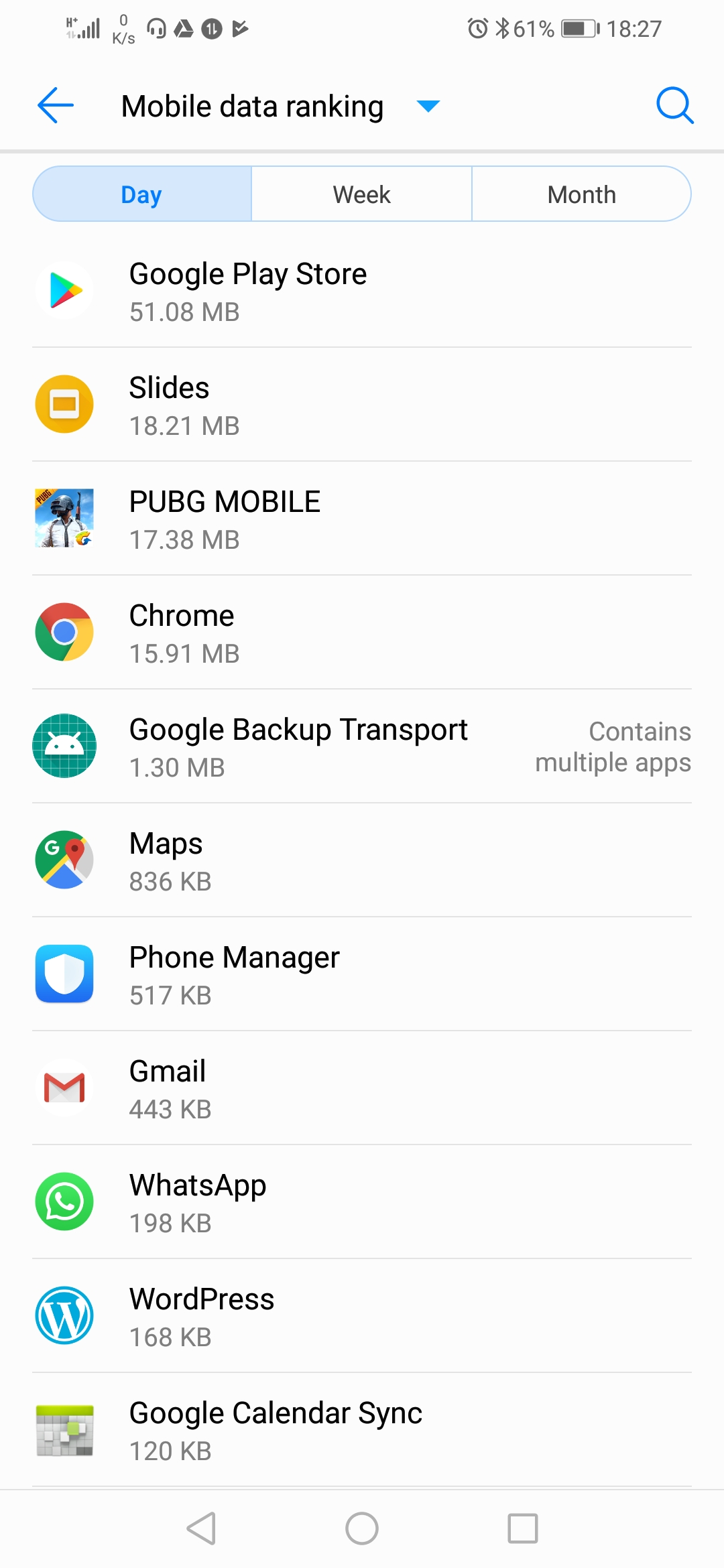

Power Consumption

The PlayStation is not very power efficient. Just keeping it idle on the main menu draws around 70 W. I mean, why? That’s too much for a system doing nothing! Playing a YouTube video draws around 100 W. And on top of that, we have to add the power consumption of the TV, which can range from 70 W to 200 W.

A Raspberry Pi, a Chromecast or an Android TV box would not draw more than 6 W for playing a YouTube video. I really wouldn’t recommend the PS4 for non-gaming uses such as a TV media centre.
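To get a feel for what that gap means over a month, here is a rough Python sketch that converts watts into kilowatt-hours. The 100 W and 6 W figures are the ones quoted above; the two hours of video per day is an assumption picked purely for the example, and the TV is left out because it draws the same either way.

```python
def energy_kwh(watts: float, hours: float) -> float:
    """Energy used in kilowatt-hours for a constant power draw."""
    return watts * hours / 1000.0


PS4_YOUTUBE_W = 100   # PS4 playing a YouTube video (figure from the text)
SMALL_BOX_W = 6       # upper bound quoted for a Pi / Chromecast / TV box
HOURS_PER_DAY = 2     # assumed viewing time, not a measured value
DAYS = 30

hours = HOURS_PER_DAY * DAYS
ps4_kwh = energy_kwh(PS4_YOUTUBE_W, hours)
box_kwh = energy_kwh(SMALL_BOX_W, hours)

print(f"PS4:       {ps4_kwh:.2f} kWh per month")
print(f"Small box: {box_kwh:.2f} kWh per month")
print(f"Ratio:     about {ps4_kwh / box_kwh:.0f}x")
```

Multiply the kWh figures by your own electricity tariff to turn them into a monthly cost.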

And that’s it for now. What’s your favourite PS4 game? I’m looking for a Tomb Raider game to buy right now.