You are writing a Python script that has to read a file and loop through each line to do some work. Seems easy, right?

    # Read the whole file into memory as a list of lines
    with open('loulou.txt', 'r') as f:
        lines = f.readlines()
    for line in lines:
        print(line)

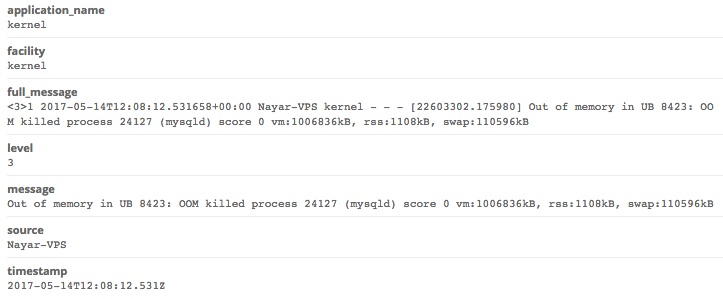

The problem appears when your file becomes big. With a 1500 MB file, say, the code will still run on your development laptop, but it will fail on a cloud server with 1 GB of RAM, because readlines() loads the whole file into memory at once. You’ll get an Out Of Memory error, which can lead to some very important process being killed if OOM priorities are not properly tuned.

You can use the Collectiva service by NAYARWEB.COM to get alerted in near real time whenever an Out Of Memory error occurs on one of your servers.

To fix the code above, you can read the file line by line from the disk:

    # Iterate over the file object so only one line is held in memory at a time
    with open('loulou.txt', 'r') as f:
        for line in f:
            print(line)
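To see the difference for yourself, here is a rough sketch that compares the peak memory of both approaches using the standard `tracemalloc` module. The helper names (`peak_memory`, `read_all_at_once`, `read_line_by_line`) and the generated sample file are made up for this illustration, and the exact numbers will vary with your Python version and platform.

```python
import os
import tempfile
import tracemalloc


def peak_memory(read_func, path):
    """Return the peak number of bytes allocated while read_func(path) runs."""
    tracemalloc.start()
    read_func(path)
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return peak


def read_all_at_once(path):
    # Loads every line into a list before processing anything
    with open(path, 'r') as f:
        lines = f.readlines()
    count = 0
    for line in lines:
        count += 1
    return count


def read_line_by_line(path):
    # Keeps only the current line (plus a small read buffer) in memory
    count = 0
    with open(path, 'r') as f:
        for line in f:
            count += 1
    return count


# Build a hypothetical sample file of a few megabytes for the comparison
with tempfile.NamedTemporaryFile('w', suffix='.txt', delete=False) as tmp:
    for i in range(200000):
        tmp.write('line number %d\n' % i)
    sample_path = tmp.name

peak_all = peak_memory(read_all_at_once, sample_path)
peak_stream = peak_memory(read_line_by_line, sample_path)
os.remove(sample_path)

print('readlines():  %d bytes at peak' % peak_all)
print('line by line: %d bytes at peak' % peak_stream)
```

With `readlines()` the peak should grow with the size of the file, while the line-by-line version should stay roughly flat no matter how big the file gets.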

Is this always better? No. This version can run slightly slower than the first one, depending on your storage backend.
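If the line-by-line version turns out to be slower on your storage, one thing you can try is a bigger read buffer through the `buffering` argument of `open()`. This is only a sketch: the `count_lines` helper and the 1 MiB buffer size are assumptions chosen for the example, and whether it helps at all depends on your storage backend, so measure before and after.

```python
import os
import tempfile


def count_lines(path, buffer_size=1024 * 1024):
    """Iterate line by line while reading from disk in larger chunks.

    buffer_size is an illustrative value (1 MiB), not a recommendation.
    """
    count = 0
    with open(path, 'r', buffering=buffer_size) as f:
        for line in f:
            count += 1
    return count


# Small hypothetical sample file, just to show the call
with tempfile.NamedTemporaryFile('w', suffix='.txt', delete=False) as tmp:
    for i in range(1000):
        tmp.write('line number %d\n' % i)
    sample_path = tmp.name

total = count_lines(sample_path)
os.remove(sample_path)
print(total)
```

Memory use stays bounded by the buffer size plus the current line, so you keep the benefit of the line-by-line approach.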

It’s amazing how small differences like these can have a huge impact on production servers.

Happy Monitoring to all System Admins out there 🙂