Terms:

B: byte = 8 bits

Mb: megabit = 1024 * 1024 bits

MB: megabyte = 1024 * 1024 bytes = 1024 * 1024 * 8 bits

GB: gigabyte = 1024 MB
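The definitions above can be written as a small conversion helper. This is a minimal sketch in Python that follows the binary (1024-based) units listed here. Internet line rates are usually quoted in decimal units (1 Mbps = 1,000,000 bits per second), so real-world figures may differ slightly. The function names are made up for illustration.

```python
# Units as defined in the Terms list (binary, 1024-based), all expressed in bits.
BITS_PER_BYTE = 8
MEGABIT = 1024 * 1024                     # Mb
MEGABYTE = 1024 * 1024 * BITS_PER_BYTE    # MB
GIGABYTE = 1024 * MEGABYTE                # GB


def megabits_to_megabytes(mb: float) -> float:
    """Convert megabits (Mb) to megabytes (MB); works the same for per-second rates."""
    return mb * MEGABIT / MEGABYTE


def gb_to_megabits(gb: float) -> float:
    """Express a data volume in gigabytes (GB) as megabits (Mb)."""
    return gb * GIGABYTE / MEGABIT


print(megabits_to_megabytes(10))  # 1.25: a 10Mbps line moves at most 1.25MB per second
print(gb_to_megabits(50))         # 409600.0 Mb in a 50GB allowance
```

The factor of 8 between bits and bytes is why a 10Mbps line tops out at 1.25MBps; protocol overhead brings real downloads closer to 1MBps.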

It has been more than a month since I started using Orange’s (Mauritius Telecom) fibre connection at 10Mbps, which works out to about 1MBps in practice (10 ÷ 8 = 1.25MBps in theory, a bit less after protocol overhead). Since I mostly download via peer-to-peer (torrents), I can say that if there are enough peers, most downloads will run at that speed. If you use file-sharing websites, the speed limit may come from their end 😉

10Mbps is more than enough for me. My HD videos download faster than I can watch them. A 700 MB Ubuntu ISO takes about 10 minutes to download, and a 1400 MB 1080p .mkv video takes about 20 minutes. I can even download sequentially and watch while it downloads. Why would I want a 100Mbps connection right now?
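Those download times follow from size divided by rate. A minimal sketch, assuming the link is saturated at the full nominal 10Mbps with no protocol overhead, and using the binary units from the Terms list; the helper name `download_minutes` is hypothetical.

```python
MEGABIT = 1024 * 1024         # bits in 1 Mb (Terms list)
MEGABYTE = 1024 * 1024 * 8    # bits in 1 MB (Terms list)


def download_minutes(size_mb: float, rate_mbps: float) -> float:
    """Best-case minutes to fetch size_mb megabytes at rate_mbps megabits/s."""
    seconds = size_mb * MEGABYTE / (rate_mbps * MEGABIT)
    return seconds / 60


# 700 MB Ubuntu ISO and 1400 MB 1080p .mkv at the full 10Mbps.
for size in (700, 1400):
    print(f"{size} MB at 10Mbps: {download_minutes(size, 10):.1f} min")
```

This gives about 9.3 and 18.7 minutes, so the "about 10" and "about 20" minute figures are consistent with a link running close to full speed.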

The Catch?

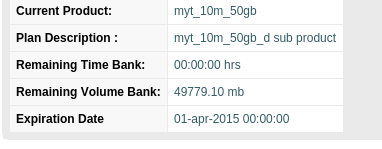

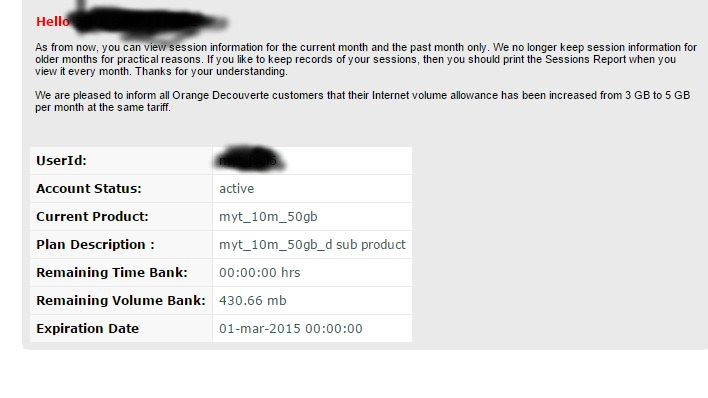

The 10Mbps speed only applies to the first 50GB of traffic. Yes, uploads and downloads both count towards it. I think I saw about 54GB in the customer section of Orange’s website at the beginning of the month, but that’s it. Being a responsible torrent user is harder now. I usually seed to a 1.10 ratio, so I have to wait until the 50GB is used up and then seed without limits until the end of the month.

50GB is used up in 15 days.
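Using up 50GB in 15 days means an average of roughly 3.3GB of traffic per day; stretching it over a whole month would mean about halving that. A minimal sketch, assuming a 30-day month and steady daily usage; the function name `daily_rate_gb` is hypothetical.

```python
CAP_GB = 50  # high-speed allowance per month (uploads and downloads combined)


def daily_rate_gb(cap_gb: float, days: float) -> float:
    """Average traffic per day (GB) that uses up cap_gb in the given number of days."""
    return cap_gb / days


current = daily_rate_gb(CAP_GB, 15)  # cap reached in 15 days
target = daily_rate_gb(CAP_GB, 30)   # cap spread over an assumed 30-day month
print(f"current: {current:.2f} GB/day, budget for 30 days: {target:.2f} GB/day")
```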

My mom and sister are heavy “youtubers”. Yesterday I had to ask my mom to cut back on watching her serials so the high-speed allowance would last longer. 50GB is really not enough for a typical family.

No 2Mbps for local and YouTube traffic once the package is over

Once your 50GB is used up, you drop to 1Mbps. Seriously? Make it at least 4Mbps!

WiFi weaker

I noticed the WiFi signal is a bit weaker than with the previous ADSL modem. Has anyone else noticed this?

Online Gaming

I usually play League of Legends online, and my ping is almost the same as before. Nothing to note here.

Conclusion

10Mbps is the perfect speed to have in 2015, but the data limit is a deal breaker. It is torture: you don’t feel “free” anymore.

You start wondering whether you really need to download this right now. I think I would have preferred 5Mbps unlimited for the whole month over 10Mbps for 50GB and then 1Mbps afterwards. Does anyone else feel the same? Ideally, we should all have 10Mbps unlimited!
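The trade-off between the two plans can be made concrete by comparing how long the same monthly traffic takes to transfer under each. A minimal sketch, assuming the household keeps the pace of 50GB every 15 days (so 100GB over a 30-day month), a saturated link with no overhead, and the binary units from the Terms list. The 5Mbps unlimited plan is the hypothetical alternative from the paragraph above, and `hours_to_transfer` is a made-up helper.

```python
MEGABITS_PER_GB = 1024 * 8  # 1 GB = 1024 MB = 8192 Mb (Terms list)


def hours_to_transfer(total_gb: float, tiers: list[tuple[float, float]]) -> float:
    """Best-case hours to move total_gb through a plan.

    tiers is a list of (allowance_gb, rate_mbps) applied in order;
    use float("inf") as the allowance of the last tier for "unlimited".
    """
    remaining = total_gb
    seconds = 0.0
    for allowance_gb, rate_mbps in tiers:
        chunk = min(remaining, allowance_gb)
        seconds += chunk * MEGABITS_PER_GB / rate_mbps
        remaining -= chunk
        if remaining <= 0:
            break
    return seconds / 3600


MONTHLY_GB = 100                        # assumed: 50GB per 15 days, 30-day month
capped = [(50, 10), (float("inf"), 1)]  # 10Mbps for 50GB, then 1Mbps
flat = [(float("inf"), 5)]              # hypothetical 5Mbps unlimited

print(f"10Mbps/50GB then 1Mbps: {hours_to_transfer(MONTHLY_GB, capped):.1f} h")
print(f"5Mbps unlimited:        {hours_to_transfer(MONTHLY_GB, flat):.1f} h")
```

Under these assumptions the capped plan needs about 125 hours of transfer time against about 46 for the flat 5Mbps plan. The break-even point is around 56GB a month: below that, the capped 10Mbps plan comes out ahead.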